Retrieval-Augmented Generation (RAG)

We already know core ideas such as NLP and LLMs. Let's look at how to build applications on top of an LLM, and how RAG fits into the deployment side.

Retrieval-Augmented Generation (RAG) is a technique in natural language processing that combines retrieval-based and generation-based approaches to create more accurate and informative responses. Let's break down each point:

1. RAG Architecture

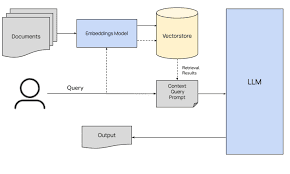

Architecture:

- Retriever: Retrieves relevant documents or passages from a large corpus based on the input query.

- Reader/Generator: Uses the retrieved documents to generate a coherent and relevant response.

- Combiner: Integrates the retrieved information with the generated response.

2. Pipeline Behind the RAG

The RAG pipeline is a collection of the above components working together:

- Query Processing: The input query is encoded into a vector.

- Retrieval: The encoded query is used to retrieve relevant documents from a vector database.

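- Document Encoding: Documents are encoded into vectors, usually ahead of time at ingestion, so they can be compared with the query vector.

- Information Integration: The retrieved passages are combined with the query, either by inserting them into the prompt or, in some architectures, through a cross-attention mechanism inside the generator.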

- Response Generation: The generator produces the final response using the integrated information.
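The stages above can be wired together as a minimal sketch. Everything here is illustrative: `rag_answer` and the `fake_*` helpers are hypothetical names, and the encoder, search, and generator are stubs standing in for a real embedding model, vector database, and LLM.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]

def rag_answer(
    query: str,
    encode: Callable[[str], Vector],
    search: Callable[[Vector, int], List[str]],
    generate: Callable[[str], str],
    k: int = 3,
) -> str:
    """Run the pipeline: encode the query, retrieve, integrate, generate."""
    query_vec = encode(query)            # Query Processing
    passages = search(query_vec, k)      # Retrieval
    context = "\n".join(passages)        # Information Integration (prompt-based)
    prompt = f"Context:\n{context}\n\nQuestion: {query}"
    return generate(prompt)              # Response Generation

# Stub components (assumptions, not real models or databases).
def fake_encode(text: str) -> Vector:
    return [float(len(text))]

def fake_search(vec: Vector, k: int) -> List[str]:
    return ["passage one", "passage two", "passage three"][:k]

def fake_generate(prompt: str) -> str:
    return f"(stub answer from {prompt.count('passage')} passages)"

reply = rag_answer("What is RAG?", fake_encode, fake_search, fake_generate, k=2)
```

Passing the components in as functions keeps the pipeline independent of any particular model or database, so each stage can be swapped or tested on its own.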

3. Evaluation of RAG

Individual Evaluation:

- Retriever Evaluation: Measures how effectively the retriever finds relevant documents (e.g., precision, recall).

- Reader/Generator Evaluation: Assesses the quality of the generated responses (e.g., BLEU score, ROUGE score, human evaluation).
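As a rough illustration of the retriever metrics, precision and recall are often computed over the top k results. The sketch below assumes documents are identified by string IDs and that a set of known-relevant IDs is available for each query; the function names and sample data are made up for the example.

```python
def precision_at_k(retrieved, relevant, k):
    """Fraction of the top-k retrieved documents that are relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / k

def recall_at_k(retrieved, relevant, k):
    """Fraction of all relevant documents that appear in the top k."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in retrieved[:k] if doc_id in relevant)
    return hits / len(relevant)

# Hypothetical ranking returned by a retriever, best match first.
retrieved = ["d3", "d1", "d7", "d2"]
# Hypothetical ground-truth relevant documents for the same query.
relevant = {"d1", "d2", "d5"}

p = precision_at_k(retrieved, relevant, k=3)  # only d1 is a hit in the top 3
r = recall_at_k(retrieved, relevant, k=3)     # 1 of the 3 relevant docs found
```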

Complete Evaluation:

- End-to-End Evaluation: Evaluates the overall performance of the RAG system by considering both retrieval and generation components together (e.g., user satisfaction, task completion rate).

4. RAG vs. Fine-Tuning

RAG:

- Flexibility: Can handle diverse queries by retrieving relevant information from a large corpus.

- Scalability: Does not require extensive fine-tuning on specific datasets.

- Efficiency: Combines retrieval and generation for accurate and informative responses.

Fine-Tuning:

- Specialization: Fine-tunes a pre-trained model on a specific dataset to improve performance on targeted tasks.

- Data Dependency: Requires a large amount of annotated data for effective fine-tuning.

- Limitations: May not generalize well to unseen queries outside the fine-tuning dataset.

Detailed Breakdown of RAG (Retrieval-Augmented Generation)

RAG can be divided into three main parts: Ingestion, Retrieval, and Generation. Let's explore each part in detail:

1. Ingestion

Definition: Ingestion is the process of transforming and storing data in a database, which can be any type of database (vector, NoSQL, etc.). The data can be homogeneous (simple RAG) or heterogeneous (multimodal RAG).

Process:

- Data Collection: Collect data in various forms such as text, images, or both (for multimodal RAG).

- Transformation: Convert the collected data into embeddings (vector representations) using suitable models.

  - For text data, use language models like BERT, GPT, etc.

  - For image data, use models like ResNet, Inception, etc.

- Storing: Store the transformed embeddings in a database. The database operations include:

  - Storing: Saving the embeddings.

  - Indexing: Creating an index for efficient search and retrieval.

  - Searching: Enabling quick lookup of relevant embeddings based on query similarity.
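A toy version of ingestion might look like the sketch below. It is only an illustration: `toy_embed` is a hashing-trick stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical class rather than a real database, and the dimension of 64 is arbitrary.

```python
import math
import zlib
from dataclasses import dataclass, field

DIM = 64  # toy embedding size, chosen arbitrarily

def toy_embed(text, dim=DIM):
    """Hashing-trick embedding: a stand-in for a real model such as BERT."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # crc32 is deterministic across runs, unlike Python's built-in hash().
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

@dataclass
class InMemoryVectorStore:
    """Hypothetical store: keeps IDs, vectors, and the original text."""
    ids: list = field(default_factory=list)
    vectors: list = field(default_factory=list)
    texts: dict = field(default_factory=dict)

    def add(self, doc_id, text):
        # Transformation and storing happen together in this toy version.
        self.ids.append(doc_id)
        self.vectors.append(toy_embed(text))
        self.texts[doc_id] = text

store = InMemoryVectorStore()
store.add("doc-1", "RAG retrieves documents before generating.")
store.add("doc-2", "Vector databases index embeddings.")
```

Keeping the original text next to each vector matters because the generator later needs the text itself, not the embedding.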

2. Retrieval

Definition: Retrieval is the process of fetching relevant data from the database based on a user query.

Process:

- Indexing: Create and maintain an index for the embeddings stored in the database to facilitate fast retrieval.

- Similarity Search: Perform a similarity search based on the user query.

  - Encode the user query into an embedding.

  - Compare the query embedding with stored embeddings using similarity metrics (e.g., cosine similarity).

- Ranking: Rank the retrieved data based on relevance to the user query.

  - Retrieve the top-ranking similar or relevant data based on the similarity scores.
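The similarity search and ranking steps can be sketched with plain Python. This is a brute-force scan over a small made-up index with hand-written 3-dimensional vectors; production vector databases typically use approximate nearest-neighbor indexes instead of comparing against every stored vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vec, index, k=2):
    """Score every stored vector against the query and keep the best k."""
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical index: document ID -> embedding.
index = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.7, 0.7, 0.0],
    "doc-c": [0.0, 0.0, 1.0],
}
results = top_k([1.0, 0.1, 0.0], index, k=2)
```

Here `results` is a list of `(doc_id, score)` pairs, best match first, so the caller can apply a score threshold before passing anything to the generator.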

3. Generation

Definition: Generation is the process of creating a coherent and contextually relevant response using the retrieved data.

Process:

- Passing Data to LLM: Pass the top-ranking relevant data along with the user prompt to a large language model (LLM).

- Response Generation: The LLM processes the input and generates the final output.

  - Integrate the retrieved relevant data with the user query to generate a coherent response.
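One common way to pass the retrieved data to the LLM is to place it in the prompt ahead of the question. The sketch below shows only that assembly step. `build_prompt`, the instruction wording, and the `max_chars` budget are assumptions for illustration, and the actual LLM call is left out because it depends on the provider.

```python
def build_prompt(question, passages, max_chars=2000):
    """Combine retrieved passages and the user question into one prompt."""
    context_lines = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        line = f"[{i}] {passage}"
        # Stop adding context once the (character-based) budget is exhausted.
        if used + len(line) > max_chars:
            break
        context_lines.append(line)
        used += len(line)
    context = "\n".join(context_lines)
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_prompt(
    "What does RAG stand for?",
    [
        "RAG stands for Retrieval-Augmented Generation.",
        "Vector databases store embeddings.",
    ],
)
```

Numbering the passages makes it possible to ask the model to cite which passage supports its answer.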

Complete RAG Process:

Data Extraction:

- Sources: Extract data from various sources such as PDFs, text documents, images, audio files, videos, etc.

- Purpose: Gather diverse types of data to enrich the knowledge base.

Chunking:

- Objective: Divide the extracted data into smaller chunks or segments.

- Reason: To avoid exceeding the input token size limitations of large language models (LLMs) and to improve efficiency in processing.
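A simple way to chunk text is a sliding window with some overlap, so that sentences cut at a boundary still appear whole in one of the chunks. The sketch below splits on whitespace and counts words, which is only an approximation of token counts; `chunk_words` and its default sizes are illustrative choices.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into chunks of up to chunk_size words, sharing overlap words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks

sample = " ".join(f"w{i}" for i in range(12))
chunks = chunk_words(sample, chunk_size=5, overlap=2)
```

With 12 words, a chunk size of 5, and an overlap of 2, each chunk starts 3 words after the previous one, and the last chunk holds the remaining words.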

Embedding:

- Process: Convert the chunks of data into vector representations (embeddings).

- Method: Use embedding models appropriate for each data type (e.g., BERT for text, ResNet for images).

Storing in Knowledge Base DB:

- Storage: Save the embeddings in a knowledge base database (knowledge base DB).

- Indexing: Automatic indexing of embeddings is performed within this DB to enable efficient search and retrieval.

Vector Database:

- Final Storage: Store the indexed embeddings in a vector database.

- Purpose: Ensure that embeddings are stored in a format optimized for similarity search.

Retrieval:

- Query Processing: When a user submits a query, create an embedding for this query.

- Similarity Search: Perform a similarity search in the vector database to find relevant embeddings.

- Ranking: Retrieve and rank the most relevant results based on similarity scores.

Generation:

- Data Passing: Pass the retrieved results along with the user query to the LLM.

- Response Generation: The LLM uses the provided context to generate a coherent and contextually relevant response.

When users query large language models (LLMs) like ChatGPT or Gemini, the models generate responses based on the data they were trained on. If users ask about events after the training cutoff, or about topics the training data did not cover, the LLMs may give inaccurate or outdated answers. This limitation can be addressed through three main approaches:

Fine-Tuning:

- Definition: Fine-tuning involves updating a pre-trained model with additional training on a specific dataset. This dataset can include recent or specialized information that the original model wasn't trained on.

- Use Case: By fine-tuning an LLM on new, domain-specific data, the model can better handle queries about recent developments or niche topics. However, fine-tuning requires continuous updates and may not be as agile in handling rapidly changing information.

Third-Party Agents or APIs:

- Definition: Using third-party services or APIs involves integrating external sources of information into the LLM's responses. These sources can provide up-to-date data or specialized knowledge.

- Use Case: This approach allows the LLM to fetch real-time information from external systems or databases, enhancing its ability to respond to queries about current events or emerging trends. It leverages external knowledge without modifying the LLM's core model.

Retrieval-Augmented Generation (RAG):

- Definition: RAG combines the power of retrieval and generation by integrating a dynamic knowledge base with the LLM. It involves retrieving relevant information from an up-to-date database and using that information to generate more accurate responses.

- Use Case: RAG addresses the limitation of static training data by allowing the LLM to access the latest information through a retrieval process. When a user submits a query, RAG retrieves relevant data from the knowledge base and incorporates it into the response generation, enabling the LLM to answer questions about information newer than its training data, as long as the knowledge base is kept up to date.
